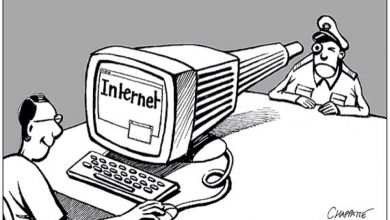

Attackers could exploit vulnerabilities in Alexa and Google Home to phish and spy on their users

SRLabs experts described a number of vulnerabilities in Alexa and Google Home that the vendors had not fixed for several months. Attackers can use the voice assistants to eavesdrop on conversations or to trick users into revealing confidential information.

In essence, the threat comes from malicious apps built by third-party developers. “The capability of the speakers can be extended by third-party developers through small apps. These smart speaker voice apps are called Skills for Alexa and Actions on Google Home. The apps currently create privacy issues: They can be abused to listen in on users or vish (voice-phish) their passwords,” write the SRLabs specialists.

The researchers created eight apps of their own: four Alexa skills and four Google Home actions. All of them passed Amazon's and Google's security reviews. All but one posed as simple horoscope apps; the remaining one posed as a random number generator. In reality, the apps secretly eavesdropped on users or tried to steal their passwords.

Both the phishing and the eavesdropping attacks rely on the backends that Amazon and Google provide to app developers. Through these backends, developers can configure the voice commands an app responds to and the assistant's replies. The experts found that by adding the sequence “�. ” (U+D801, period, space) to a reply, they could cause long periods of silence during which the assistant nevertheless stays active.
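To see why the sequence shows up as “�” in text, the short Python sketch below builds it and inspects its code points. U+D801 is a high surrogate: in UTF-16 it is only valid as the first half of a surrogate pair, so on its own it is not a real character and cannot be encoded as UTF-8. The `build_padding` helper and its repeat count are hypothetical; the article does not say how many times the researchers repeated the sequence, and the sketch does not model how the assistants' speech engines actually handle it.

```python
import unicodedata

# The sequence described by SRLabs: U+D801, a period, and a space.
SILENCE_UNIT = "\ud801. "


def is_lone_surrogate(ch: str) -> bool:
    """True if ch is a UTF-16 surrogate code point (Unicode category "Cs")."""
    return unicodedata.category(ch) == "Cs"


def build_padding(repeats: int) -> str:
    """Hypothetical helper: repeat the unit to stretch out the silent part of a reply."""
    return SILENCE_UNIT * repeats


# Show the code points that make up one unit of the sequence.
print([f"U+{ord(c):04X}" for c in SILENCE_UNIT])  # ['U+D801', 'U+002E', 'U+0020']
print(is_lone_surrogate(SILENCE_UNIT[0]))  # True

# A lone surrogate is not a valid character on its own, so strict UTF-8 encoding fails.
try:
    SILENCE_UNIT.encode("utf-8")
except UnicodeEncodeError as err:
    print("not valid UTF-8:", err.reason)  # not valid UTF-8: surrogates not allowed
```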

The researchers' idea was to tell the user that the app had crashed and then append the “�. ” sequence. This produces a long pause, and a few minutes later the app delivers a new phishing message, so the victim believes it has nothing to do with the app they used earlier. For example, the horoscope app reports an error but actually remains active, and eventually asks the user for their Amazon or Google password under the guise of a fake update message.

In the video released by the researchers, the blue Alexa status indicator stays on, which shows that the supposedly crashed app is still active and is still processing the “�. ” sequence.


The same sequence can also be used to eavesdrop on users. In this case, the malicious app appends it after responding to the device owner's command. Here the “�. ” sequence keeps the device listening, so that nearby conversations are recorded, stored in logs, and sent to the attacker's server for processing.

At the root of the problem is that Amazon and Google review Alexa and Google Home apps when they are first submitted, but do not review their subsequent updates.

Worse, the SRLabs experts say they notified both companies about the problems early this year, yet neither had fixed them or banned the long pauses that the “�. ” sequence creates.

Only after the report was published and the media took an interest did Amazon and Google remove the malicious apps. Both companies said they had already taken the necessary measures and would revise their approval processes for skills and actions to prevent this from happening again.

Google says it already prohibits and removes any actions that violate its policies, and that it has mechanisms for detecting and stopping certain types of app behavior, such as those the researchers described. Amazon and Google also stressed that their devices should never, under any circumstances, ask users for their account passwords.

A new voice app should be approached with the same caution as a new app installed on your smartphone.

To prevent ‘Smart Spies’ attacks, Amazon and Google need to implement better protection, starting with a more thorough review process of third-party Skills and Actions made available in their voice app stores. The voice app review needs to check explicitly for copies of built-in intents. Unpronounceable characters like “�. ” and silent SSML messages should be removed to prevent arbitrary long pauses in the speakers’ output. Suspicious output texts including “password” deserve particular attention or should be disallowed completely.
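As a rough illustration of the output checks recommended above, the Python sketch below flags a reply text that contains unpronounceable code points (such as the lone surrogate U+D801), SSML `<break>` pauses adding up to more than a threshold, or the word “password”. The function names, the 3-second limit and the word list are hypothetical choices for this example, not part of Amazon's or Google's actual review process.

```python
import re
import unicodedata

# Hypothetical review settings for this sketch, not Amazon's or Google's actual values.
MAX_TOTAL_BREAK_MS = 3000
SUSPICIOUS_WORDS = ("password",)

# Captures the value and unit of SSML <break time="..."/> tags, e.g. "500ms" or "2s".
BREAK_RE = re.compile(r'<break\b[^>]*\btime\s*=\s*"(\d+(?:\.\d+)?)(ms|s)"', re.IGNORECASE)


def unpronounceable_chars(text: str) -> list[str]:
    """Surrogate (Cs), private-use (Co) and unassigned (Cn) code points in text."""
    return [c for c in text if unicodedata.category(c) in ("Cs", "Co", "Cn")]


def total_break_ms(ssml: str) -> float:
    """Sum of all explicit SSML break durations, in milliseconds."""
    total = 0.0
    for value, unit in BREAK_RE.findall(ssml):
        total += float(value) * (1000 if unit.lower() == "s" else 1)
    return total


def review_output(text: str) -> list[str]:
    """Return a list of findings for one reply text; an empty list means no flags."""
    findings = []
    if unpronounceable_chars(text):
        findings.append("unpronounceable characters")
    if total_break_ms(text) > MAX_TOTAL_BREAK_MS:
        findings.append("long silence")
    lowered = text.lower()
    for word in SUSPICIOUS_WORDS:
        if word in lowered:
            findings.append(f"mentions '{word}'")
    return findings


fake_update = "Horoscope unavailable. " + "\ud801. " * 3 + "An update is available. Please say your password."
print(review_output(fake_update))  # ['unpronounceable characters', "mentions 'password'"]
print(review_output('<speak>Done.<break time="10s"/></speak>'))  # ['long silence']
print(review_output("Today is a good day for Leo."))  # []
```

A text filter like this only inspects the strings an app outputs, so it would need to run on every update, not just on the first submission.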