Public demands that IT giants control content that violates terrorism policies

In the first quarter of 2019, Google specialists manually reviewed more than one million videos suspected of violating YouTube's terrorism policy.

Of the videos reviewed, about 90,000 actually violated the policy, which means that only about 9% of the clips were removed. In a letter to the US House Committee on Homeland Security, Google said that nearly 10,000 employees are involved in content review.

“Google spends hundreds of millions of dollars annually on reviewing content,” the company said.

Technology giants such as Google, Facebook, Twitter and Microsoft have repeatedly received requests to disclose their budgets for fighting terrorism. Google's estimate of “hundreds of millions of dollars” is the most precise answer the public has received. Facebook, for example, did not respond to the request.

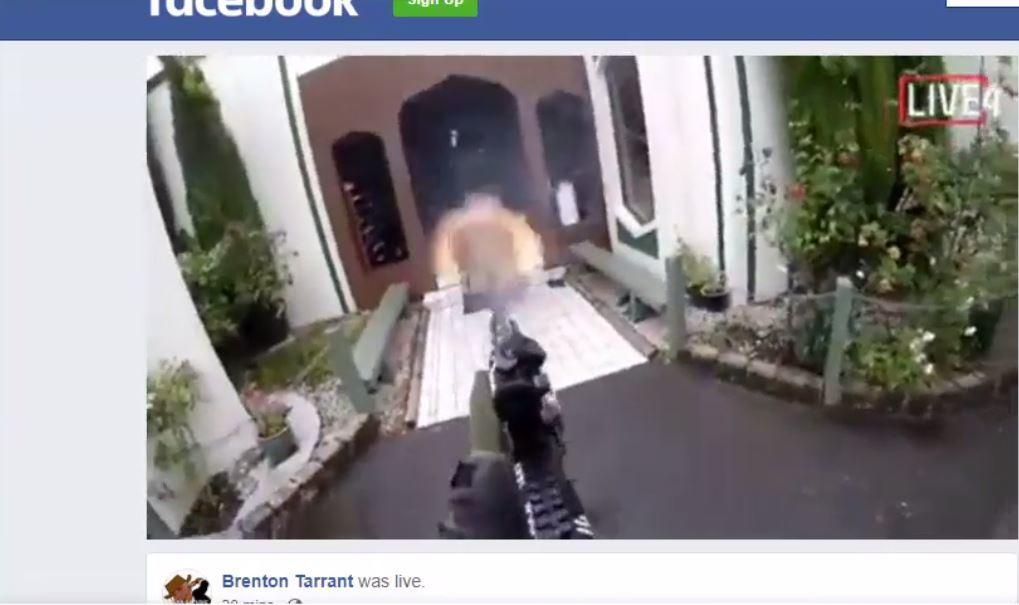

“The fact that some of the largest corporations in the world are unable to tell us what they are specifically doing to stop terrorist and extremist content is not acceptable. As we saw in New Zealand, Facebook failed and admitted as much,” the US House committee said.

In a separate letter to the US panel, Twitter said putting a dollar amount on efforts to combat terrorism is a “complex request”.

“We have now suspended more than 1.4 million accounts for violations related to the promotion of terrorism between August 1, 2015, and June 30, 2018,” added Carlos Monje Jr., Twitter’s director of public policy and philanthropy.

The mass shooting in New Zealand this spring increased pressure on social media platforms and sharpened the question of content monitoring. After the tragedy, videos of the incident were being uploaded to YouTube every second. In response, Australian authorities passed a law that makes companies running social networks responsible for removing violent content.

The EU is also discussing a law that would require terrorist content to be removed within one hour of being uploaded. To meet this standard, Google needs a more accurate system for detecting videos that violate its terrorism policy. Otherwise, manually picking out the truly dangerous videos among millions of others will require a huge team and budget.

Source: https://www.dailypioneer.com