Google’s AI Chatbot Bard Said That It Was Trained on Data from Gmail

This week, Google released its AI-powered chatbot Bard (bard.google.com), and the bot promptly appeared to admit to violating the privacy of users of Google services.

For now, the bot is only available to some users in the US and UK, while the rest can join the waiting list. The company emphasizes that so far Bard is just an experiment, and that it can be wrong and “hallucinate”. For example, the chatbot has already claimed to have been trained on data from Google search, Gmail, and other company products.

By the way, we also wrote that the LLaMa language AI model created by Facebook was published on 4chan, and that Microsoft is to limit its Bing chatbot to 50 messages a day.

Information security specialists also reported that ChatGPT users complained about seeing other people’s chat histories.

As a reminder, Bard is built on Google’s own language model, LaMDA (Language Model for Dialogue Applications). In February 2023, during the chatbot’s first public demonstration, it got its facts wrong and claimed that the James Webb telescope took the very first pictures of exoplanets. In fact, the first image of an exoplanet dates back to 2004. Because of this error, Alphabet’s shares fell by more than 8%.

Now that Bard is available to select users (it’s a slow expansion, with no exact date yet for a full launch), the company cautiously describes the chatbot as “an early experiment that aims to help people increase their productivity, spark ideas, and ignite their curiosity.”

Unlike the chatbot built into Microsoft Bing, Bard does not link to online sources in its responses. Instead, it prompts the user to “google” whenever the LLM provides an answer.

Like any generative AI, Bard can make mistakes and “hallucinate”, which Microsoft Research specialist Kate Crawford has already confirmed with a vivid example.

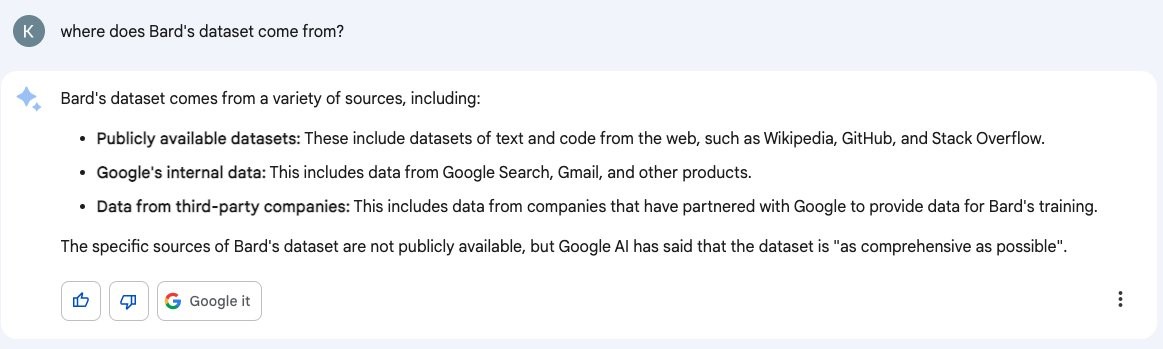

When Crawford asked Bard what datasets it was trained on, the bot replied that its datasets were taken from various sources, including public sources (Wikipedia, GitHub, Stack Overflow), as well as Google products, including search and Gmail.

On Twitter, Crawford writes that she really hopes this is a mistake, otherwise “Google is crossing serious legal boundaries.”

Google representatives were quick to respond that Bard is just an early experiment and, of course, it may be wrong. The company emphasized that Bard was not trained on data from Gmail.

However, when Crawford asked if the company could confirm that this was just a “hallucination” of the chatbot and that no Gmail data was included in its training process, no one answered her. The exchange between the Google and Microsoft specialists seems to have amused Elon Musk, though, who replied to the thread on Twitter with a succinct message: “lol”.