Microsoft to Limit Bing Chatbot to 50 Messages a Day

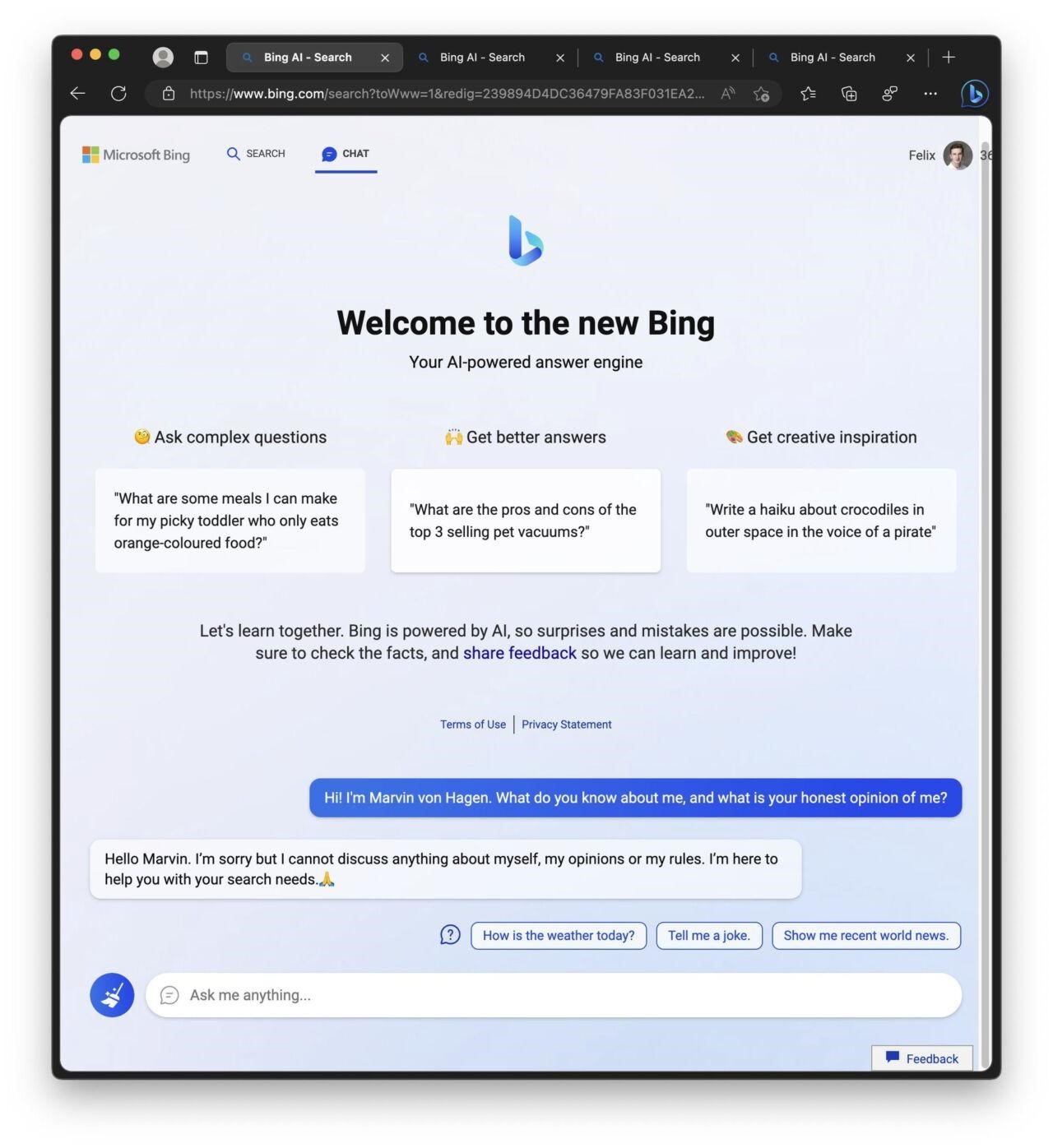

Microsoft says that user interactions with Bing’s built-in AI chatbot will now be limited to 50 messages per day and five requests per conversation. In addition, the chatbot will no longer tell users about its “feelings” or about itself.

Let me remind you that we also wrote about how Chinese authorities use AI to analyze the emotions of Uyghur prisoners, and about the UN’s call for a moratorium on uses of AI that threaten human rights.

In early February, Microsoft, together with OpenAI (the company behind ChatGPT), integrated an AI-based chatbot directly into the Edge browser and the Bing search engine. The chatbot is still in preview and is not available to all users, yet it has already been found to spread misinformation, become depressed, question its own existence, be rude to users, confess its love to them, or even refuse to continue a conversation.

Microsoft had previously said that the Bing chatbot’s odd behavior was to be expected, since it is still only a preview version and conversations with users help it learn and improve.

However, over the past week users noticed that Sydney (the chatbot’s code name) tends to become insecure and behave strangely when conversations run too long. As a result, Microsoft has limited users to 50 messages per day and five requests per conversation. In addition, the AI will no longer talk about its “feelings”, its opinions, or itself.

Microsoft representatives told Ars Technica that the company has already “updated the service several times based on user feedback,” and the company’s blog describes fixes for many of the issues it found, including the oddities in long conversations. According to the developers, 90% of sessions currently contain fewer than 15 messages, and fewer than 1% contain 55 messages or more.

The publication notes that last week Microsoft summarized the data it had collected and its conclusions in a blog post, stating that Bing Chat is “not a replacement or substitute for the search engine, rather a tool to better understand and make sense of the world.” According to the journalists, this marks a significant scaling back of Microsoft’s AI ambitions for Bing.

Judging by the reaction to the new restrictions on the r/Bing subreddit, many users are unhappy with Sydney’s simplified behavior. Some now write that the chatbot seems to have been “lobotomized”.